We believe that every individual should be able to make their own free and informed choices online. Amurabi is therefore committed to the fight against Dark Patterns.

"I accept". Who hasn't clicked the big green button without thinking, instead of the small grey links below? Worse, has anyone ever managed to unsubscribe without wasting time or losing their temper, when all it took was one click to "try for free"?

These interfaces leave nothing to chance. They play on our cognitive biases to influence or even manipulate us. The phenomenon of "dark patterns" or "deceptive patterns" is endemic and worldwide. The European Commission has shown that 97% of Europeans' favorite e-commerce sites contain at least one dark pattern. In the United States, a study of more than 11,000 sites identified more than 1,800 dark patterns.

The term "dark pattern" was first used in 2010 by Harry Brignull, UX designer and neuroscience PhD, to refer to "traps on sites or apps that make you act unintentionally, like buying or subscribing to something."

Many researchers have worked to define dark patterns, create taxonomies, i.e. classifications, and identify the legal grounds they violate and how to regulate them.

In general terms, a dark pattern is an interface that deceives or manipulates users into acting without realizing it, or even against their own interests.

Good to know

Many researchers prefer the term "deceptive patterns" to avoid any negative connotation being attached to the word "dark". Amurabi fully supports this precaution, but since the law refers to "dark patterns", we use that term for clarity.

Dark patterns put all users at risk, and more vulnerable users in particular, by calling into question our ability to make autonomous decisions online. Our R&D Lab took on the problem in 2021. Thanks to BPI funding, we have created a platform dedicated to the fight against dark patterns, where you can:

Identify if your site or your app contains dark patterns

Create custom fair patterns or buy them off the shelf

Train yourself to spot them and avoid creating new ones, whether you are a designer, developer, marketer or part of a product team

Some companies "bet" on the manipulation of the cognitive biases of their customers, instead of the quality of their products or services.

Daniel Kahneman, winner of the Nobel Prize in economics and an expert in cognitive psychology, showed that our brains function in two main ways:

System 1: fast, efficient and energy-saving thanks to the use of heuristics - quick and intuitive mental operations that let us make decisions "without thinking".

System 2: slow, deliberate and more effortful, used for conscious reasoning and complex decisions.

System 1 relies heavily on our cognitive biases, "mental shortcuts" that draw on incomplete, irrational or distorted information and make us "act without thinking". More than 180 cognitive biases have been identified.

Default settings are against the interests of the user

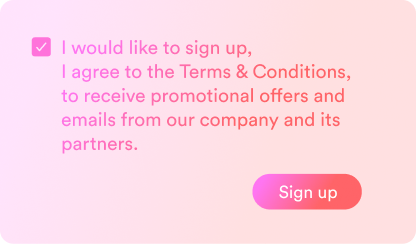

For example: pre-checked boxes, having to accept a bundle of settings without being able to adjust them individually, or wording such as "Accept all" with no option to customize.

Selective disclosure of information

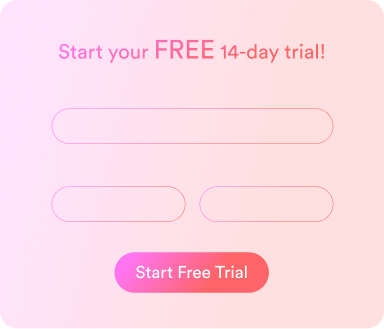

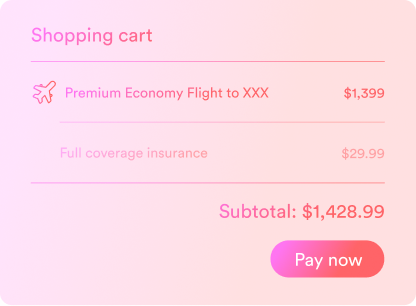

For example: sneaking items into the basket (adding products without any action by the user), hidden costs, forced continuity (automatic renewal after the free trial period), disguised advertising.

Make users do and/or share more than they meant to


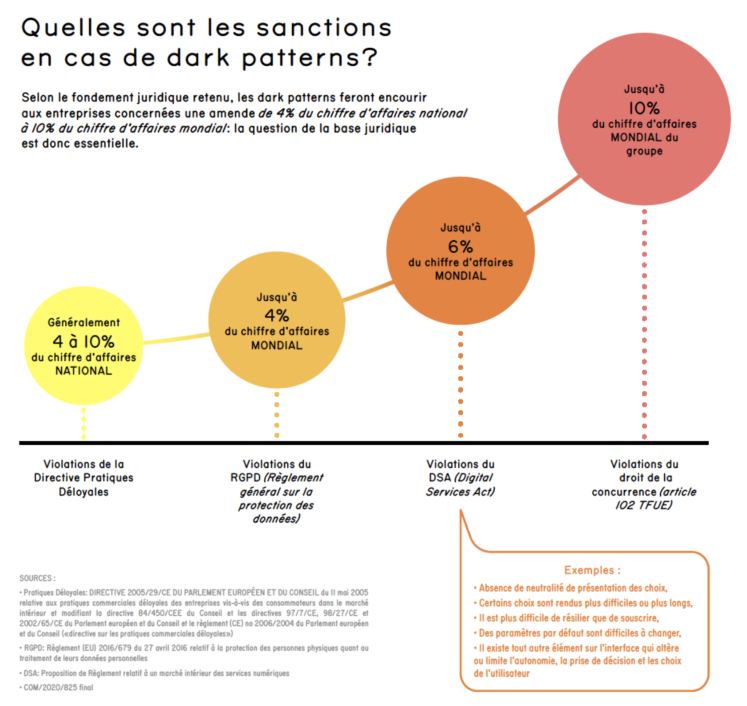

Regulators and legislators in Europe and around the world have recognized the danger dark patterns pose to confidence in the digital economy, and even in the market economy itself. Specific prohibitions are emerging, such as the Digital Markets Act, in addition to the existing legislative arsenal in personal data, consumer and competition law.

For example, the European regulation on digital services, the Digital Services Act ("DSA"), expressly prohibits "dark patterns" for the first time in Europe in a binding text. The DSA defines them as a digital interface that "misleads, manipulates, alters or substantially limits the ability of users to make free and informed decisions" due to its "design, organization or the way it is operated."

This specific prohibition complements the existing legal bases on which dark patterns can be prohibited, such as the GDPR and the Unfair Commercial Practices Directive in the EU, Section 5 of the FTC Act in the United States, and competition law in both Europe and the United States.
